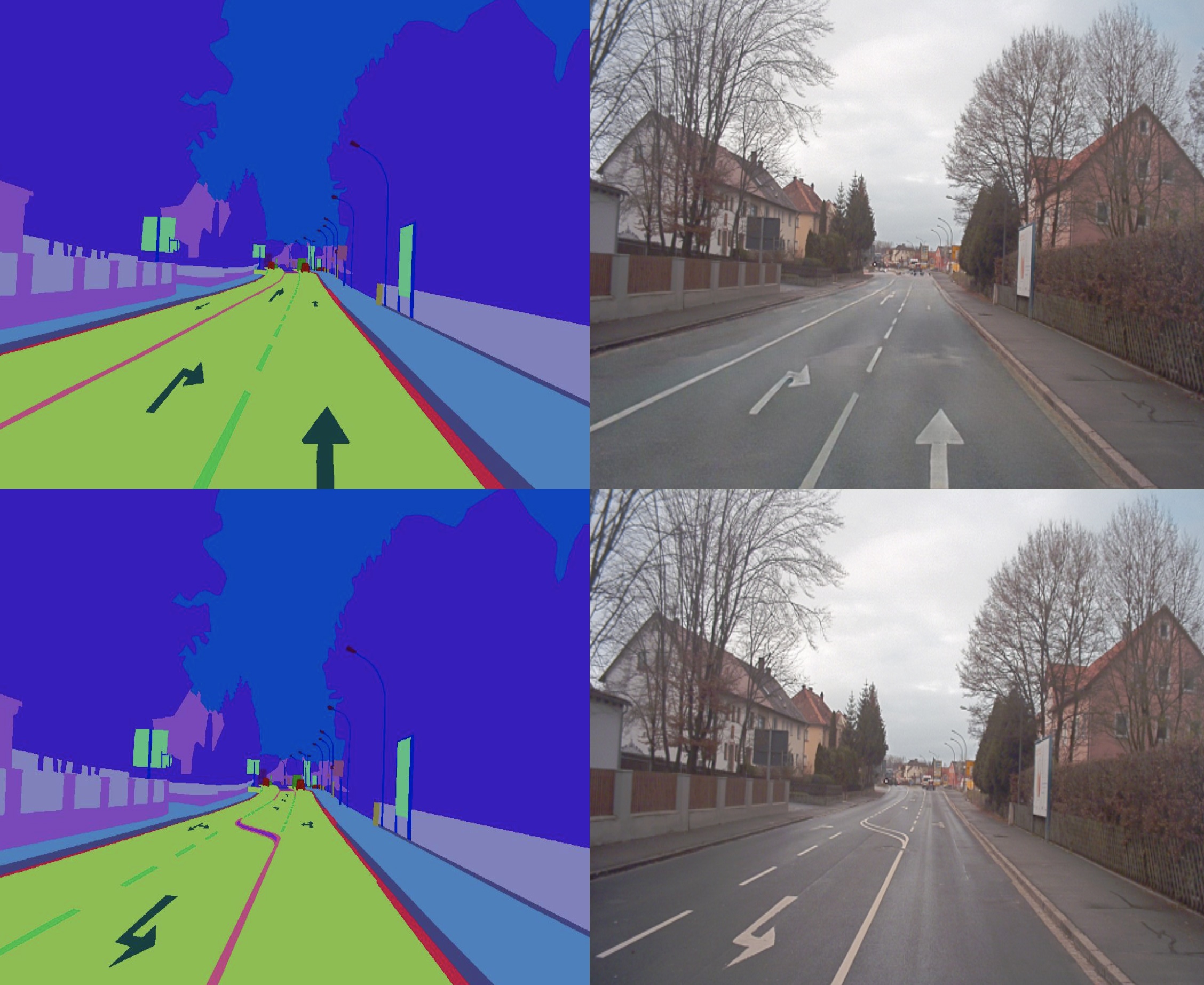

Deepfaking uses deep-learning artificial intelligence (AI) to generate fake photo-realistic images, which Oxbotica is using "to test countless scenarios".

Oxbotica can generate thousands of these images in minutes, allowing it to change weather, cars, buildings and time of day - thus exposing autonomous vehicles (AVs) to "near infinite variations of the same road scene" without the need for real-world testing.

Deepfake techniques have been used to mislead, shock and scam by realistically doctoring video footage to make it seem as though someone has said or done something they have not. The company instead uses them to reproduce scenes in adverse conditions - even putting rain water on lenses - or to confront vehicles with rare occurrences.

Oxbotica already uses gamers in its R&D and believes that deepfake algorithms can help make AVs safer.

"Using deepfakes is an incredible opportunity for us to increase the speed and efficiency of safely bringing autonomy to any vehicle in any environment," said Paul Newman, co-founder and CTO at Oxbotica.

"What we’re really doing here is training our AI to produce a syllabus for other AIs to learn from. It’s the equivalent of giving someone a fishing rod rather than a fish. It offers remarkable scaling opportunities."

He says there is no substitute for real-world testing but suggests that the AV sector has become concerned with the number of miles travelled "as a synonym for safety".

"And yet, you cannot guarantee the vehicle will confront every eventuality, you’re relying on chance encounter," he says.

Oxbotica says the technology can reverse road signage or 'class switch' - which is where an object such as a tree is replaced by another, such as a building - or change the lighting of an image to mimic different times of the day or year.
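To make those three edits concrete, here is a minimal Python sketch of what each one means at the pixel level. It is not Oxbotica's method: the company describes a learned generative system, whereas these are simple hand-written transforms on a tiny grayscale image. The function names (`mirror`, `relight`, `class_switch`) and the flat fill value are assumptions made for illustration.

```python
# Toy illustration only. A learned deepfake model would synthesise realistic
# textures; these hand-written transforms just show what each edit changes.
# Images are row-major lists of grayscale pixel values in the range 0-255.

def mirror(image):
    """Flip the image left-to-right, which reverses any signage in the scene."""
    return [row[::-1] for row in image]


def relight(image, factor):
    """Scale brightness to mimic another time of day, clamping to 0-255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]


def class_switch(labels, image, source, target, fill):
    """Relabel every `source` pixel as `target` and repaint it with `fill`.

    `labels` holds one class name per pixel. The flat `fill` value is a
    placeholder for the texture a generative model would produce.
    """
    new_labels = [[target if lab == source else lab for lab in row]
                  for row in labels]
    new_image = [[fill if lab == source else p for lab, p in zip(lrow, prow)]
                 for lrow, prow in zip(labels, image)]
    return new_labels, new_image


if __name__ == "__main__":
    scene = [[90, 40], [95, 42]]
    classes = [["tree", "road"], ["tree", "road"]]
    print(mirror(scene))
    print(relight(scene, 0.5))
    print(class_switch(classes, scene, "tree", "building", 180))
```

Each function returns a new image rather than editing in place, so one source scene can be turned into many variants.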

The company says: "The data is generated by an advanced teaching cycle made up of two co-evolving AIs, one is attempting to create ever more convincing fake images while the other tries to detect which are real and which have been reproduced."

Engineers have designed a feedback mechanism which means both AIs compete to outsmart each other.

"Over time, the detection mechanism will become unable to spot the difference, which means the deepfake AI module is ready to be used to generate data to teach other AIs."
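The two competing AIs described here match the general pattern known as a generative adversarial network (GAN). The article does not say which architecture Oxbotica uses, so the following is only a toy sketch of that pattern: instead of images, the "real" data are single numbers drawn from a bell curve, the generator is a two-parameter line and the detector is a one-input logistic classifier. All names, parameters and default values are assumptions.

```python
import math
import random


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train(steps=2000, batch=64, lr=0.05, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate detector and generator updates; return both parameter pairs."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # detector: P(sample is real) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Detector step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((1 - d) * x for d, x in zip(d_real, real))
                  - sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(1 - d for d in d_real) - sum(d_fake)) / batch
        w += lr * grad_w
        c += lr * grad_c

        # Generator step: gradient ascent on log D(fake), so that the
        # updated detector rates the fakes as more likely to be real.
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_a = sum((1 - d) * w * z for d, z in zip(d_fake, noise)) / batch
        grad_b = sum((1 - d) * w for d in d_fake) / batch
        a += lr * grad_a
        b += lr * grad_b

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train()
    print("generator scale and offset:", a, b)
    print("detector score for a typical fake:", sigmoid(w * b + c))
```

In this setup a detector score near 0.5 for generated samples would be the analogue of being "unable to spot the difference". Adversarial training can oscillate rather than settle, so a single run of this sketch should not be read as a convergence result.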